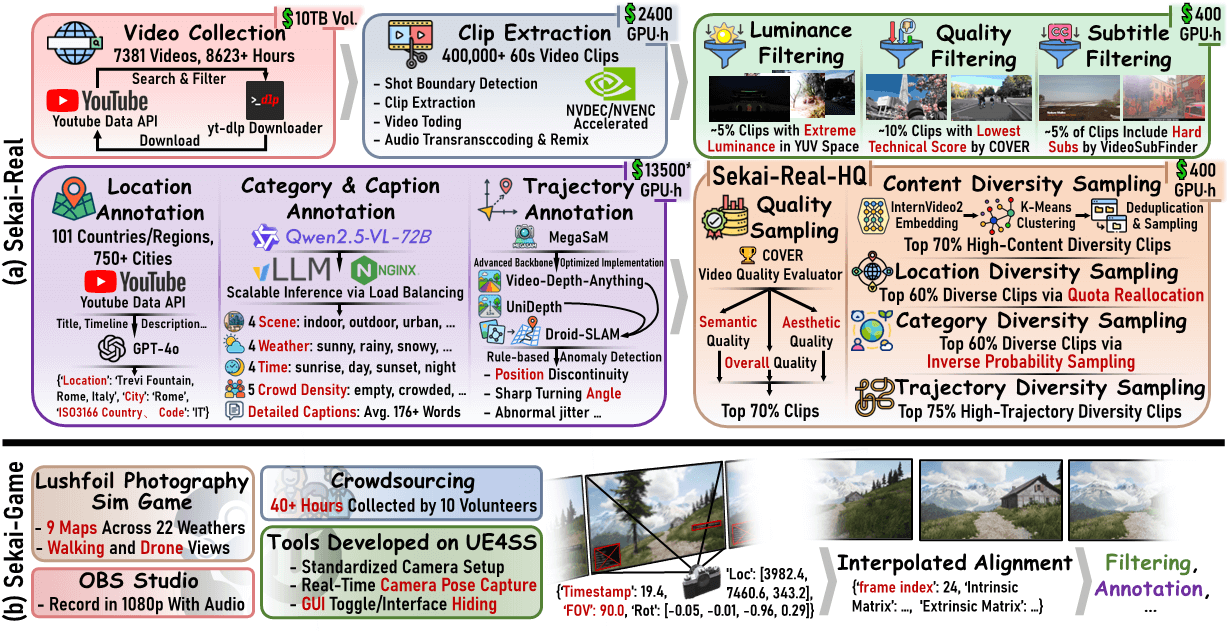

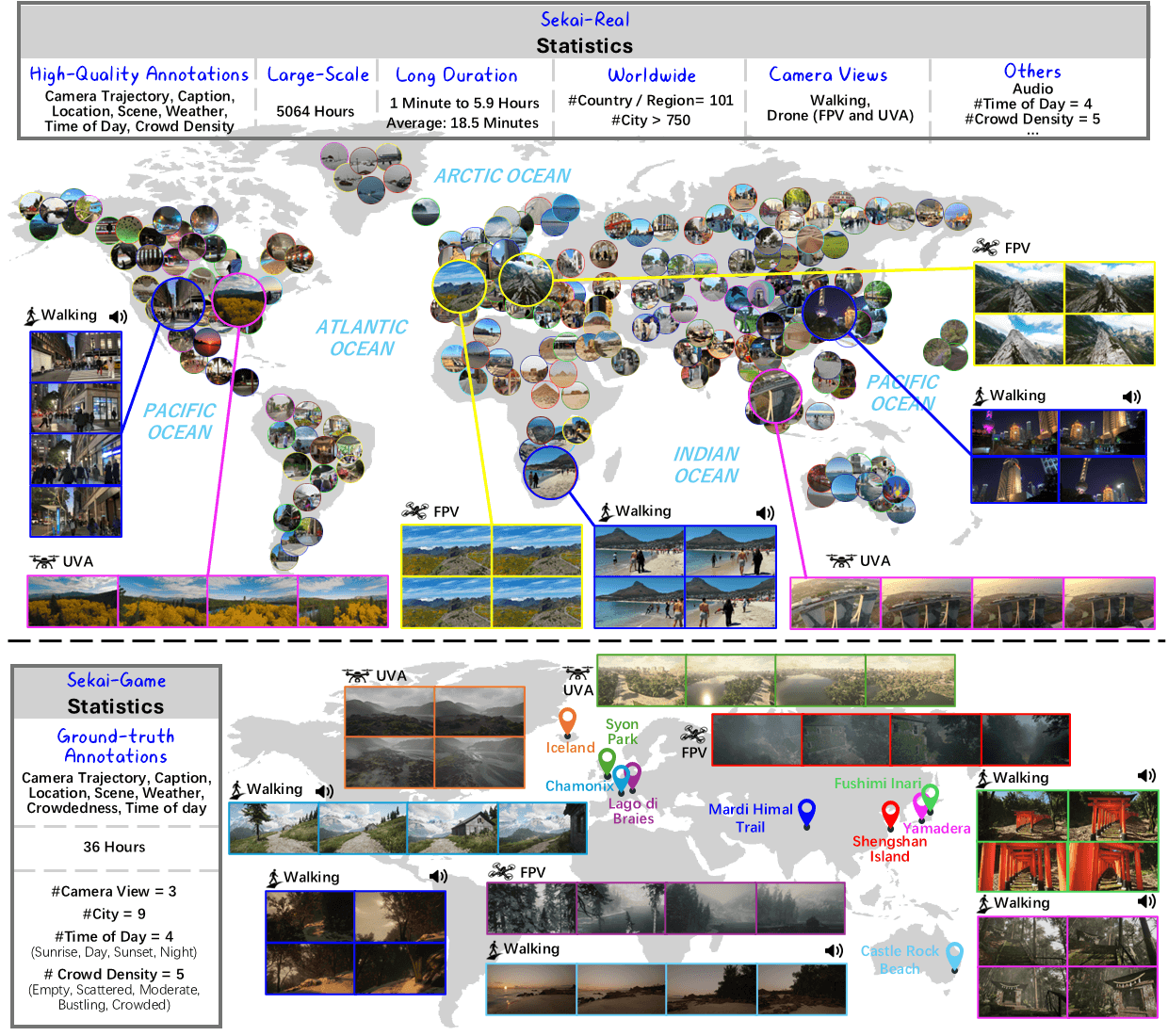

In this paper, we introduce Sekai (せかい, meaning "world" in Japanese), a high-quality, egocentric, worldwide video dataset for world exploration. Most videos include audio, which supports immersive world generation. The dataset also benefits other applications, such as video understanding, navigation, and video-audio co-generation. Sekai-Real comprises over 5000 hours of video collected from YouTube with high-quality annotations. Sekai-Game comprises videos from a realistic video game with ground-truth annotations. Sekai has five distinct features:

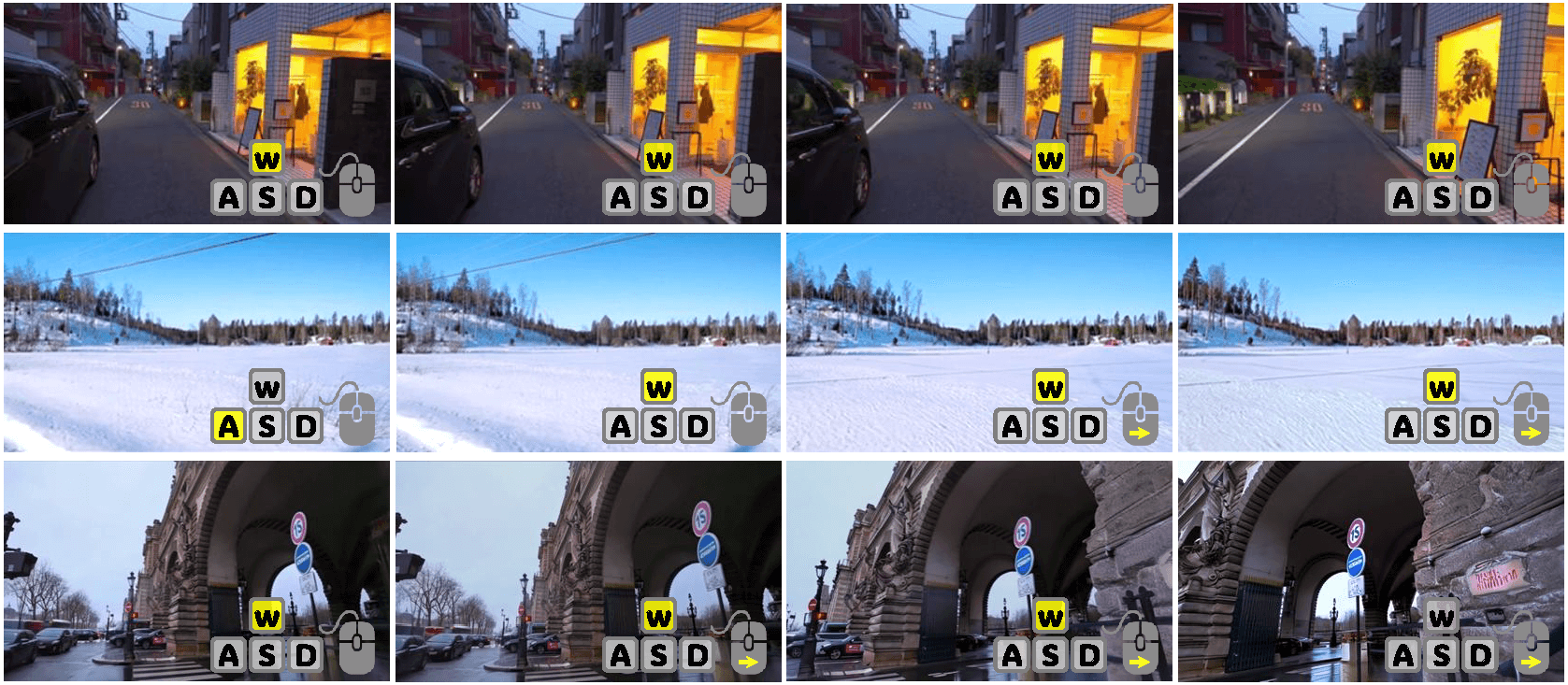

1. High-quality and diverse video. All videos are recorded in 720p, featuring diverse weather conditions, various times of day, and dynamic scenes.

2. Worldwide location. Videos span 100 countries and regions, showcasing 750+ cities with diverse cultures, activities, and landscapes.

3. Walking and drone view. Beyond walking videos, Sekai includes drone-view (FPV and UAV) videos for unrestricted world exploration.

4. Long duration. All walking videos are at least 60 seconds long, supporting realistic, long-term world exploration.

5. Rich annotations. All videos are annotated with location, scene, weather, crowd density, captions, and camera trajectories. Annotations for the YouTube videos are of high quality, while annotations from the game are considered ground truth.
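To make the annotation fields listed above concrete, the following is a minimal sketch of how a per-clip record might be represented and filtered. The class name `ClipAnnotation`, the helper `select_long_walking`, all field names, the trajectory layout, and the sample records are assumptions made for illustration; they are not the dataset's actual schema or tooling.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ClipAnnotation:
    """Hypothetical per-clip record; field names are illustrative only."""
    clip_id: str
    source: str         # assumed values: "real" (YouTube) or "game"
    view: str           # assumed values: "walking" or "drone"
    duration_s: float   # clip length in seconds
    location: str
    scene: str
    weather: str
    crowd_density: str
    caption: str
    # Camera trajectory as (x, y, z) positions; the real format may differ.
    trajectory: List[Tuple[float, float, float]] = field(default_factory=list)


def select_long_walking(clips, min_duration_s=60.0):
    """Keep walking clips that last at least `min_duration_s` seconds."""
    return [
        c for c in clips
        if c.view == "walking" and c.duration_s >= min_duration_s
    ]


# Toy records, made up purely to exercise the filter.
clips = [
    ClipAnnotation("walk_long", "real", "walking", 120.0,
                   "Tokyo", "street", "sunny", "high", "A walk downtown."),
    ClipAnnotation("walk_short", "real", "walking", 30.0,
                   "Paris", "park", "cloudy", "low", "A short stroll."),
    ClipAnnotation("drone_long", "game", "drone", 300.0,
                   "n/a", "coast", "clear", "none", "A flight over water."),
]

selected = select_long_walking(clips)
print([c.clip_id for c in selected])
```

The 60-second default mirrors the minimum walking-video duration stated in feature 4; a stricter threshold can be passed when longer clips are needed.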